Docker is a platform that enables developers to build, deploy, and run applications in containers. Containers are lightweight and portable environments that encapsulate application code and dependencies, allowing for easy deployment and scaling across different environments.

Docker provides a standardized way to package applications into containers, which can run on any platform that supports Docker. This allows developers to build and test applications in a consistent environment, and then deploy them to production without having to worry about differences in the underlying infrastructure.

Using Docker, developers can create isolated environments for different components of an application, such as a web server, a database, and a messaging queue. Each component can be packaged into its own container, making it easy to scale and manage each part of the application separately.

Docker has become a popular tool for building and deploying applications in modern cloud-native architectures and is used by many companies and organizations around the world.

What is a container

A container is an isolated environment that packages an application together with all of its necessary dependencies and configuration. It is highly portable and easily shared between development and operations teams, which makes development and deployment more efficient.

Where do containers live

Container images live in a container registry. Many companies run their own private registry where they host and store all their images. There is also a public registry, Docker Hub, where you can push and store your own images. Docker has also offered an Enterprise Edition with more features specific to enterprises.

What is a Docker Image

A Docker image is a lightweight, standalone, executable package that includes everything needed to run a piece of software, including the code, runtime, system tools, libraries, and settings. Docker images are built from a set of instructions called a Dockerfile, which describes how to build the image step by step.

After a Docker image is built, it can be pushed to a container registry, such as Docker Hub, where it can be easily shared and downloaded by others. Once an image is downloaded, it can be used to run a container, which is an instance of the image that is isolated from the host system and other containers.

Images are designed to be portable and easily shared between different environments, making it easy to deploy applications in different environments without worrying about dependencies or configurations. This allows developers to build once and deploy anywhere, simplifying the process of deploying applications to production and reducing the risk of errors due to differences in environments.

In summary, a Docker image is a packaged and portable version of an application that can be easily shared and deployed as a container in any environment that supports Docker.
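As a rough illustration of the Dockerfile-to-image-to-container flow described above, the sketch below writes a small Dockerfile and shows the build and run commands. The Dockerfile contents, the tag my-image:1.0, and the run helper are assumptions made for this example. The helper only prints each command by default; set DRY_RUN=0 to execute them against a real Docker installation.

```shell
# Dry-run helper (hypothetical): prints the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "+ $*"; fi
}

# Write a minimal, illustrative Dockerfile into a scratch directory.
build_dir="$(mktemp -d)"
cat > "$build_dir/Dockerfile" <<'EOF'
# Start from a base image hosted on Docker Hub
FROM ubuntu:18.04
# Add a dependency on top of the base image
RUN apt-get update && apt-get install -y curl
# Command executed when a container starts from this image
CMD ["bash"]
EOF

# Build an image from the Dockerfile, then start a container from that image.
run docker build -t my-image:1.0 "$build_dir"
run docker run --rm my-image:1.0 curl --version
```

Each instruction in the Dockerfile adds a layer to the image, and the container started in the last step is an isolated instance of that image.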

Types of Docker Images

There are three types of Docker Images available on Docker Hub.

Docker Official Image

Docker Official Images are a curated set of Docker open source and "drop-in" solution repositories.

Verified Publisher

High-quality images from publishers verified by Docker. These products are published and maintained directly by a commercial entity. These images are not subject to rate limiting.

Sponsored OSS

Docker Sponsored Open Source Software. These are images published and maintained by open-source projects that are sponsored by Docker through its open source program.

Key Benefits of Docker

Docker offers several benefits over traditional approaches to software development and deployment, including:

Portability: Docker images are self-contained and portable, which means that they can run on any platform that supports Docker, making it easy to move applications between different environments without worrying about dependencies or configurations.

Efficiency: Docker uses a shared kernel, which means that containers are lightweight and consume minimal resources compared to virtual machines. This allows developers to run multiple containers on the same host without impacting performance or stability.

Consistency: Docker provides a consistent environment for building, testing, and deploying applications, which reduces the risk of errors and improves the reliability of the application.

Isolation: Docker containers are isolated from the host system and other containers, which improves security and reduces the risk of conflicts between applications.

Scalability: Docker makes it easy to scale applications by allowing developers to deploy multiple instances of a container on different hosts or on the same host.

Flexibility: Docker can be used with a wide range of tools and technologies, including orchestration tools like Kubernetes, making it easy to integrate with existing infrastructure and workflows.

Overall, Docker provides a powerful and flexible platform for building, deploying, and managing modern applications, and is increasingly popular in the software development community.

Docker Vs VM

Every system has three layers: the hardware layer, the OS kernel layer, and the application layer. Docker and virtual machines are both virtualization tools. Docker virtualizes only the application layer, whereas a virtual machine virtualizes both the OS kernel and the application layer.

Size: Docker images are much smaller than VM images. Docker images are typically measured in megabytes, while VM images are typically measured in gigabytes.

Speed: Docker containers start and run much faster than VMs, because a VM has to boot its own OS kernel before it can load applications on top of it.

Compatibility: A VM image of any OS can run on any host OS, whereas Docker containers share the host's kernel and are therefore host-OS specific. On hosts where Docker is not natively supported, such as Windows 7 and 8, the legacy Docker Toolbox can be used to run Docker; on Windows 10, you can use the Windows Subsystem for Linux to run Linux-based containers.

What is Hypervisor?

A hypervisor, also known as a virtual machine monitor, is a type of software or firmware that creates and manages virtual machines (VMs) on a physical host machine. The hypervisor acts as an intermediary between the physical hardware and the VMs, providing a layer of abstraction that enables multiple VMs to run on the same physical machine without interfering with each other.

There are two main types of hypervisors:

Type 1 (or bare-metal) hypervisors run directly on the physical hardware of a host machine and manage VMs without requiring an underlying operating system.

Type 2 hypervisors run on top of an existing operating system and create VMs as processes within the host operating system.

Docker Desktop: On Windows and macOS, Docker Desktop uses a hypervisor layer with a lightweight Linux distribution, because Docker was originally built for Linux-based operating systems.

What is Docker Architecture?

The Docker architecture consists of several components that work together to enable the creation, deployment, and management of Docker containers. These components include:

Docker Engine: This is the core component of the Docker architecture and provides the runtime environment for Docker containers. It includes a daemon that runs on the host machine and a command-line interface (CLI) for managing containers and images.

Docker Hub: This is a public container registry where users can find, store, and share Docker images. Docker Hub also supports private repositories for secure storage and distribution of Docker images.

Docker Client: This is the command-line interface that allows users to interact with the Docker Engine and manage Docker containers and images.

Docker Compose: This is a tool for defining and running multi-container Docker applications. It allows developers to define the different services that make up an application and how they should be configured and connected.

Docker Swarm: This is Docker's native orchestration tool for managing clusters of Docker hosts and deploying and scaling Docker services across them.

Dockerfile: This is a text file that contains a set of instructions for building a Docker image. Docker images are created by running the instructions in a Dockerfile and can be stored and distributed as a single file.

Overall, the Docker architecture is designed to be modular and flexible, allowing users to build and deploy containerized applications in a variety of environments and configurations.
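To make the Docker Compose component more concrete, here is a minimal sketch that writes a two-service Compose file (a web server and a database) and shows the commands that would start and stop it. The service names, images, password value, and the run helper are assumptions chosen for illustration. Commands are only printed unless DRY_RUN=0 is set.

```shell
# Dry-run helper (hypothetical): prints the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "+ $*"; fi
}

# Write an illustrative Compose file describing two connected services.
project_dir="$(mktemp -d)"
cat > "$project_dir/compose.yaml" <<'EOF'
services:
  web:
    image: nginx
    ports:
      - "8080:80"   # host port 8080 -> container port 80
    depends_on:
      - db
  db:
    image: postgres
    environment:
      POSTGRES_PASSWORD: example   # placeholder value, not for real use
EOF

# Start both services in the background, then tear them down again.
run docker compose -f "$project_dir/compose.yaml" up -d
run docker compose -f "$project_dir/compose.yaml" down
```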

How does Docker Container work?

On top of the host OS, there is a Docker Engine layer that manages the life cycle of Docker containers. All Docker containers run on top of the Docker Engine.

Installation of Docker on Amazon Linux

yum install docker -y

Note: The installation steps differ depending on the operating system and its version.

To check whether Docker is installed correctly, run the version command.

docker --version

If the output shows a version string such as Docker version 20.10.16, Docker was installed successfully.

Main Docker Commands

docker pull

The docker pull command downloads images from a registry such as Docker Hub.

docker pull ubuntu

#to download the image tagged latest

docker pull ubuntu:18.04

#to download the ubuntu 18.04 image
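The tag rule used by docker pull (no tag means latest) can be sketched with a small helper. The function name image_tag is hypothetical, and the helper is deliberately simplified: it does not handle digest references such as name@sha256:...

```shell
# Print the tag that a given image reference resolves to.
# Simplified sketch: digest references (name@sha256:...) are not handled.
image_tag() {
  ref="$1"
  last="${ref##*/}"   # part after the final slash, e.g. "ubuntu:18.04"
  case "$last" in
    *:*) echo "${last##*:}" ;;   # explicit tag after the colon
    *)   echo "latest" ;;        # no tag given, so "latest" is assumed
  esac
}

image_tag ubuntu          # prints: latest
image_tag ubuntu:18.04    # prints: 18.04
```

Looking only at the part after the final slash keeps a registry port, as in localhost:5000/ubuntu, from being mistaken for a tag.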

docker images

To list all downloaded images.

docker images

docker image ls

Note: Both commands work exactly the same.

docker run

It creates a container from an image. If the image has not been downloaded yet, Docker pulls it from Docker Hub and then creates the container.

docker run ubuntu

The docker run command accepts many options.

--name to give a name to the container

-d to run the container in detached mode

-i to keep STDIN open (interactive mode)

-t to allocate a terminal (pseudo-TTY) for the container

docker run -itd --name dev-server ubuntu

# ubuntu container running in detached mode with the name dev-server (options must come before the image name)

docker run -it ubuntu:18.04 bash

In this case, it opens the interactive terminal of bash inside the ubuntu:18.04 container. It is a foreground approach.

Ctrl+D exits the container's shell and stops the container

Ctrl+P followed by Ctrl+Q detaches from the container without stopping it

docker ps

To check the status of running containers

docker ps

docker ps -a

#to check the status of all containers (running, stopped, exited, ...)

docker rmi

It is used to delete an image.

-f is used to delete an image forcefully.

docker rmi imagename:tagname

docker rmi -f imagename:tagname

docker search

Used to search for images on Docker Hub.

docker search imagename

docker rm

To delete an exited or stopped container. There are two ways to use the docker rm command.

#1st way

docker rm containerID/containerName

#2nd way

docker container rm containerID/containerName

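docker system prune

To delete all stopped containers, unused networks, unused images, and the build cache. The -a option includes all unused images rather than only dangling ones, and -f skips the confirmation prompt.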

docker system prune -a -f

docker stop

To stop a running container.

docker stop containerID/containerName

docker start

To start the stopped container.

docker start containerID/containerName
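The commands covered so far can be strung together into one container life cycle. This is only a sketch: the container name dev-server and the run helper are assumptions for the example, and the helper prints each command unless DRY_RUN=0 is set.

```shell
# Dry-run helper (hypothetical): prints the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "+ $*"; fi
}

run docker pull ubuntu:18.04                              # download the image
run docker run -itd --name dev-server ubuntu:18.04 bash   # create and start a container
run docker ps                                             # dev-server is listed as running
run docker stop dev-server                                # stop the container
run docker ps -a                                          # dev-server is listed as exited
run docker start dev-server                               # start the same container again
run docker rm -f dev-server                               # force-remove the container
```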

Going inside the docker container

docker attach

To attach your terminal to the main process of a running container.

docker attach containerID/containerName

docker exec runs a new command inside a running container. With -it and bash, it opens a new interactive shell in the container:

docker exec -it contID/contName bash

docker kill

It stops a container forcefully by killing the main process inside the container (SIGKILL by default). The container exits with code 137.

docker kill containerID/containerName

docker stats

To check the statistics of all containers

docker stats

#to check the stats of a particular container

docker stats containerID/containerName

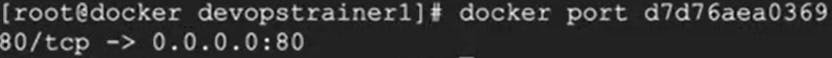

docker port

To see the port mappings of a container

docker port [Container-Name/ID]

docker inspect

To check detailed information about a container, such as its IP address

docker inspect containerID/containerName

docker info

To check the docker installation details

docker info

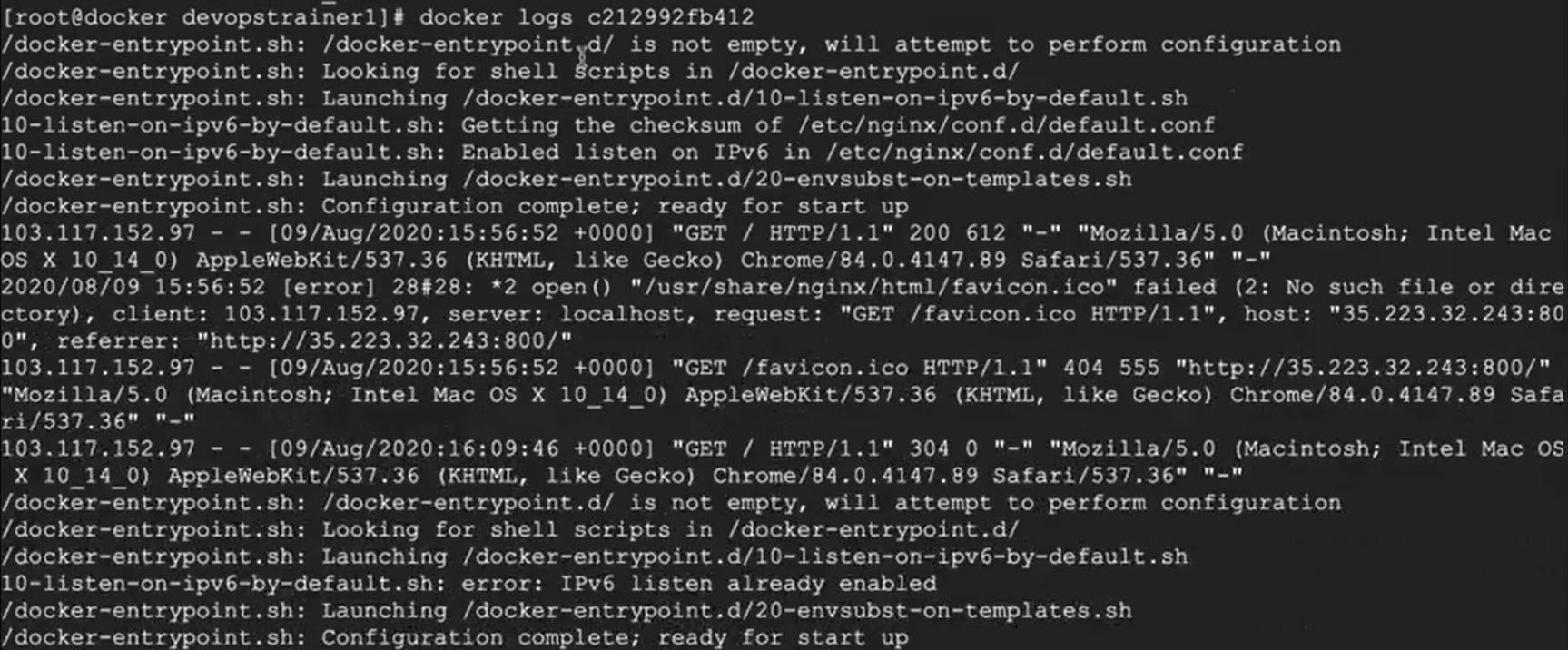

docker logs

To see docker logs. It shows what happened inside the containers.

docker logs [container-name/ID]

docker logs -f [container-name/ID]

#to follow the container's logs continuously

To upload a custom docker image on the docker hub

docker login

To log in to Docker Hub from the Docker CLI.

docker login

docker commit

To create an image from a running container.

docker commit contID/contName [username-dockerhub]/[custom-image-name]:tagname

docker push

To upload a custom-created image to the docker hub.

docker push [dockerhub-username]/[custom-image-name]:tagname
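Put together, the upload steps might look like the following sketch. The account name, the image name custom-ubuntu:v1, the container name dev-server, and the run helper are all placeholders assumed for this example. Commands are only printed unless DRY_RUN=0 is set.

```shell
# Dry-run helper (hypothetical): prints the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "+ $*"; fi
}

# Placeholder account name; override it with your own Docker Hub username.
DOCKERHUB_USER="${DOCKERHUB_USER:-your-dockerhub-username}"
image="$DOCKERHUB_USER/custom-ubuntu:v1"

run docker login                          # authenticate against Docker Hub
run docker commit dev-server "$image"     # snapshot the container as a new image
run docker push "$image"                  # upload the image to Docker Hub
```

The image name has to start with your Docker Hub username, because that prefix tells docker push which repository to upload to.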

Docker Port binding or Port Forwarding

Port binding maps a port on the host machine/server to a port in the Docker container. The number on the left of the colon is the port of your server or instance, and the number on the right is the container port. In the second command below, port 90 of the EC2 instance is bound to port 80 of the container.

Note: You can run any number of containers on a single machine or instance, as resources allow. Docker containers run in isolation, and each container has its own identity.

docker run -itdp 80:80 httpd

docker run -itdp 90:80 httpd
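The left/right convention of the -p option can be spelled out with a small helper. The function name describe_port_mapping is hypothetical, and the sketch only covers the plain HOST:CONTAINER form, not variants that add an IP address or a protocol.

```shell
# Explain a HOST:CONTAINER port mapping as passed to "docker run -p".
# Simplified sketch: forms like IP:HOST:CONTAINER or 80/udp are not handled.
describe_port_mapping() {
  mapping="$1"
  host_port="${mapping%%:*}"        # everything before the colon
  container_port="${mapping##*:}"   # everything after the colon
  echo "host port $host_port -> container port $container_port"
}

describe_port_mapping 80:80   # prints: host port 80 -> container port 80
describe_port_mapping 90:80   # prints: host port 90 -> container port 80
```

Both httpd containers listen on port 80 internally. They can run side by side because each one is bound to a different host port.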

Docker Copy Files

To copy a file or files from the local machine/node to the Docker container

docker cp /path/[file-name.txt] contID/contName:/path-docker-container-folder

To copy a file or files from the Docker container to the local machine/node

docker cp contID/contName:/path/filename /ec2-instance-path

Docker Volumes

The preferred method for storing data produced by and used by Docker containers is through volumes. Bind mounts are reliant on the directory setup and OS of the host machine, whereas Docker manages volumes entirely.

Advantages of Docker Volumes

Bind mounts are more difficult to transfer or backup than volumes. Using the Docker API or the Docker CLI, you may manage volumes. Both Linux and Windows containers support volumes.

Volumes can be shared among multiple containers more safely.

You can store volumes on remote hosts or cloud providers, encrypt the data inside of volumes, and add additional functionality with volume drivers.

A container can pre-fill the content of new volumes. Performance-wise, Docker Desktop volumes perform substantially better than bind mounts from Mac and Windows hosts.

A volume does not increase the size of the containers that use it, and the contents of a volume live outside the lifespan of a particular container, making volumes a frequently preferable option to persisting data in a container's writable layer.

To create a docker volume

docker volume create volume-name

To list the created volumes

docker volume ls

To see details of the volume

docker volume inspect volume-name

To delete the Volume

docker volume rm volume-name

docker run -itdv vol1:/devops ubuntu:18.04 bash

Here the existing Docker volume vol1 is attached to the container and mounted at /devops. If the /devops folder does not exist in the container, Docker creates it.
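One way to see that a volume outlives any single container is to write a file from one container and read it back from another. The file name note.txt and the run helper are assumptions for this sketch. Commands are only printed unless DRY_RUN=0 is set.

```shell
# Dry-run helper (hypothetical): prints the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "+ $*"; fi
}

run docker volume create vol1
# First container writes a file into the volume and is then removed (--rm).
run docker run --rm -v vol1:/devops ubuntu:18.04 sh -c 'echo hello > /devops/note.txt'
# A second, brand-new container still sees the file through the same volume.
run docker run --rm -v vol1:/devops ubuntu:18.04 cat /devops/note.txt
# Remove the volume once it is no longer needed.
run docker volume rm vol1
```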

Docker Bind Mount

To mount a folder from the local machine's filesystem into a container folder.

docker run -itdv /mnt:/devops ubuntu:18.04 bash

Docker Network

Docker provides a powerful networking feature that allows containers to communicate with each other and with the outside world. When you create a Docker container, it is connected to a network that facilitates this communication. In this context, a Docker network is a virtual network that enables containers to establish connections and exchange data.

Three commonly used types of Docker networks are:

1. Bridge Network: The default bridge network is created by Docker when you install it, and containers join it unless you specify otherwise. Containers connected to it can communicate with each other using IP addresses. Automatic DNS resolution of container names is available on user-defined bridge networks, but not on the default bridge.

2. Host Network: When a container is connected to the host network, it shares the network stack with the Docker host machine. This means the container uses the host's network interfaces directly, and it can access network services running on the host using "localhost." It is useful when you want to expose container services on the host's network without network address translation (NAT).

3. Overlay Network: Overlay networks enable communication between containers running on different Docker hosts. This is particularly useful in distributed environments or when using Docker Swarm mode for container orchestration.

In addition to the default networks, Docker also allows you to create custom networks to suit your specific requirements. Custom networks provide isolation and segmentation between containers, allowing you to control how they communicate and share data.

1. Create a custom network:

To create a custom network in Docker, you can use the `docker network create` command and, optionally, specify the desired network driver. For example:

docker network create mynetwork

This command creates a new bridge network named "mynetwork." You can then connect containers to this network using the `--network` flag when running the `docker run` command.

2. Run containers on the network:

When running containers, you can specify the network they should be connected to using the `--network` flag with the desired network name. For example:

docker run --network mynetwork --name container1 image1

docker run --network mynetwork --name container2 image2

This will run two containers named "container1" and "container2" on the "mynetwork" network.

3. Communicate between containers on the same network:

Containers connected to the same network can communicate with each other using their container names or IP addresses.

For example, if "container1" wants to communicate with "container2," it can use the container name as the hostname:

ping container2

Alternatively, you can use the IP address of the target container to establish communication.

4. Connect containers to the external network:

By default, containers can access the external network through NAT provided by the Docker bridge network.

If you want containers to be directly accessible from the external network, you can use the `--publish` or `-p` flag when running the container to map container ports to the host's ports:

docker run --publish 8080:80 --name webapp nginx

In this example, the container's port 80 is mapped to the host's port 8080, allowing external access to the web application running inside the container.

5. Explore network configuration:

To view information about the Docker networks, you can use the `docker network ls` command, which lists all the available networks.

To inspect a specific network and see its details, you can use the `docker network inspect` command followed by the network name or ID.

6. Remove a network:

To remove a Docker network, ensure that there are no containers connected to it.

You can use the `docker network rm` command followed by the network name to delete the network:

docker network rm mynetwork

These steps provide a basic overview of using Docker networks. Depending on your requirements, you can further explore advanced network configurations, such as using network aliases, attaching containers to multiple networks, or integrating with third-party network plugins to enhance your container networking capabilities.
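The network steps above can be sketched end to end as follows. The nginx and busybox images and the run helper are assumptions picked for illustration. Commands are only printed unless DRY_RUN=0 is set.

```shell
# Dry-run helper (hypothetical): prints the command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "+ $*"; fi
}

run docker network create mynetwork                                    # create a user-defined bridge network
run docker run -d --network mynetwork --name container1 nginx          # long-running container on that network
run docker run --rm --network mynetwork busybox ping -c 1 container1   # reach container1 by its name
run docker network inspect mynetwork                                   # show connected containers and IPs
run docker rm -f container1                                            # disconnect by removing the container
run docker network rm mynetwork                                        # remove the now-empty network
```

The ping by container name relies on the DNS resolution that Docker provides on user-defined networks such as mynetwork.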